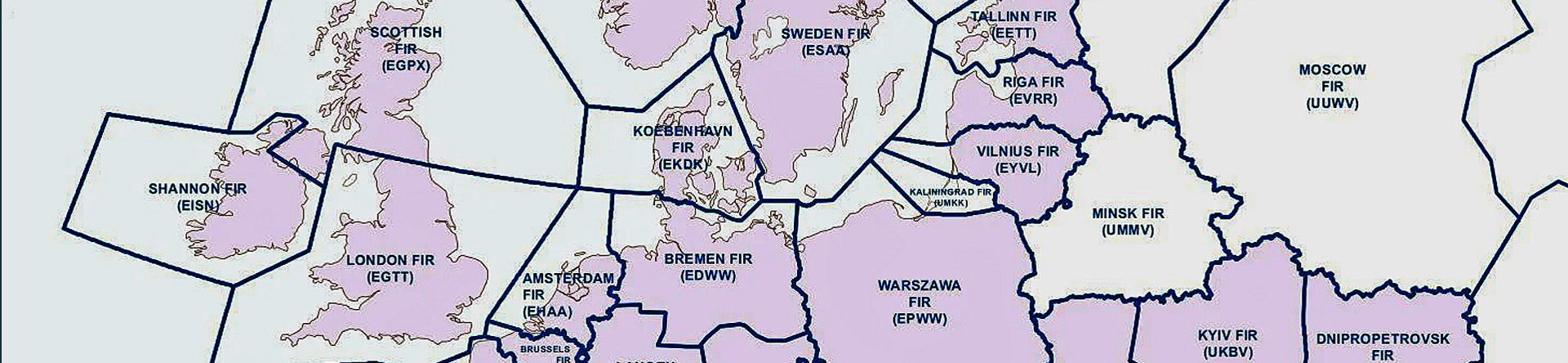

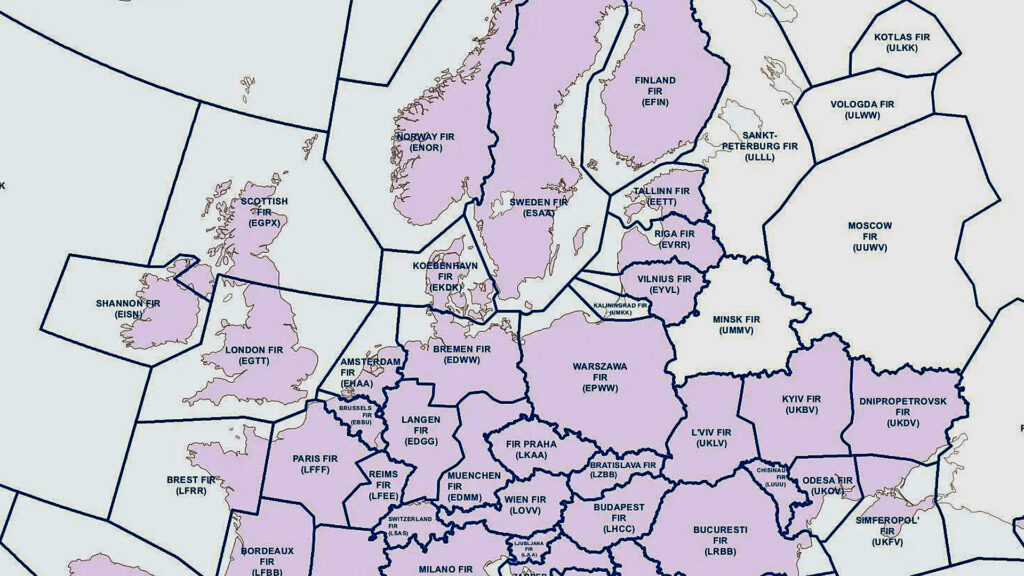

In the three previous parts, I described how we act, often intuitively, to solve problems. This approach often works well in simple systems (my example was a bike with a flat tire) and in complicated systems (a car with engine problems), but it may not work as well in complex systems (an Air Traffic Control Centre with several losses of separation).

In this fourth and final part, we will look at other problem-solving methods that may be more appropriate for complex systems. I will begin with Dave Snowden, the creator of Cynefin, a framework used to aid decision-making. According to Snowden, in complex systems we cannot know for sure what the effect of different actions will be. Every action is an experiment.

This means we must be prepared for actions to give results that are either more positive or more negative than we expected. If the result is negative, we must be able to "dampen" the effects, by discontinuing the action or by returning to an earlier state. If the result is positive, we can continue and perhaps amplify it.

These ideas resemble the "micro experiments" that Robert de Boer advocates in his new book "Safety Leadership". One example is the introduction of changes in an aviation maintenance facility, described in a paper available at: https://www.researchgate.net/publication/341728911_Safety_differently_A_case_study_in_an_Aviation_Maintenance-Repair-Overhaul_facility.

One of Snowden's conclusions is that traditional large change projects are doomed to fail. In a recent post on Twitter, he wrote:

“The single most fundamental error of the last three decades is to try and design an idealised future state rather than working the evolutionary potential of the here and now, the adjacent possibles – it is impossible to gain consensus in the former, easier in the latter.”

To me, this is a consequence of the way complex systems work: the effects of the actions we take in them are unpredictable. Still, we keep hearing descriptions of such idealised future states, and of the big projects that will realise them. Snowden instead seems to want us to observe reality and where it is heading, and to use that understanding to carefully influence its direction. The word he uses for this is "nudging".

The term "nudging" was popularised by the 2008 book "Nudge: Improving Decisions about Health, Wealth, and Happiness" by Richard H. Thaler (later a Nobel Prize winner) and Cass R. Sunstein. The book describes how choice architecture can be actively designed to help people make the choices that are best for them, without removing their freedom of choice. If you search for "nudging" online, you will find many pictures illustrating how it can be used.

Snowden's phrase "working the evolutionary potential of the here and now" seems to touch on another approach to improvement and change, called "appreciative inquiry" (AI). It originates from a 1987 article by David Cooperrider and Suresh Srivastva.

Traditional change management tends to begin with a problem, then identify its causes, and then create a plan to address it. With AI, you start by asking what is already working well. From that foundation, you create a vision of what could be and of what is needed to get there. The focus is on what is good rather than on problems, and there are many examples of good results from this method. One organisation that has used AI for improvement is British Airways.

In his book "Synesis", Erik Hollnagel seems to agree with Snowden about avoiding overly large change projects. Although he acknowledges the need for big plans to guide long-term development, he also says that turning big plans into large projects can cause difficulties. One of these concerns evaluation. Large projects take time, and since complex systems change all the time, it is hard to know whether observed changes are effects of the project or of other factors. Hollnagel therefore advocates implementing big plans through small steps, each with its own objectives. Small steps can be completed more quickly, the system can be regarded as relatively stable during each step, and evaluation becomes easier. These evaluations are important: they inform the planning of the next small step and also support the continuous evaluation of the big plan.

At the same time, Hollnagel points to a complicating factor. The effects of a change can take time to appear, especially in complex systems. If the evaluation is done too early, some effects of the change may not yet be visible. Who said managing change in complex systems should be easy?

Above all, however, Hollnagel insists that we cannot rely on simplified models of our systems to understand them. Such shortcuts do not work well. Instead, we must spend more resources on getting to know and understand our systems. One of the reasons it is so difficult to predict how a change will affect a complex system is that we know too little about it. One way forward is the Functional Resonance Analysis Method (FRAM), created by Hollnagel. I will not describe it in detail here, but it provides a way to describe a system and how its functions are connected and affect each other. This improves our ability to understand how our actions will affect the system, and by getting to know the system better, we can also reduce the gaps between "work-as-imagined" and "work-as-done".

There is much more to discuss about complex systems and how we can improve the way we manage them. For now, however, I will stop here and end this fourth and final part.